The use of peer review or journal name as indicators of trust is problematic. We advocate for a broader range of trust indicators.

The problem

Academia over-relies on a single marker of trust: peer review. However, considerable evidence now demonstrates significant failures in peer review, and these are worsening as workloads increase and the reviewer pool shrinks. AI and paper mills have exacerbated the problems whilst further exposing the deficiencies of the peer review process.

The solution

To restore trust in research outputs, we must focus on a wider range of trust indicators. These are vital for readers and users of research. They can also encourage more rigorous and transparent research practices.

Some initial examples of similar efforts include the bioRxiv toolbar and the publication facts label from PKP.

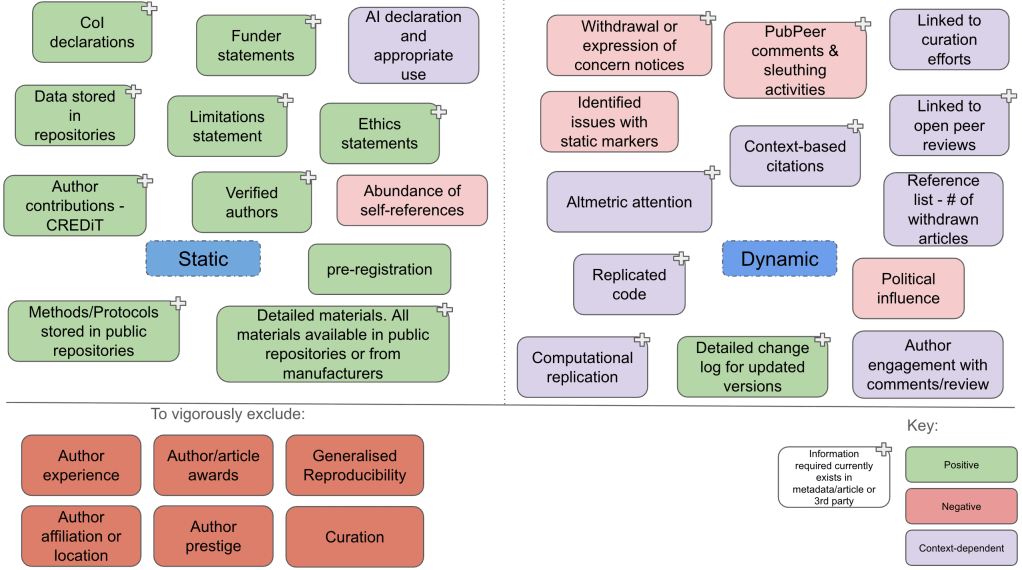

We propose a combination of static and dynamic trust indicators, to incentivise high standards whilst collating post-publication feedback, discussion and use. Static trust indicators are designed to encourage and reward rigorous and open research practices. Dynamic signals are designed to focus on the research itself rather than poor proxies and to help inform readers.

How are we achieving this?

UI design & prototyping

Trust indicator plugin

Guides & training

Trust Indicators

| Trust indicator (S = Static, D = Dynamic) | Definition | Examples |
| --- | --- | --- |
| Funder statement (S) | Statement outlining who funded the work, which authors the funding is attached to, and any associated identifying grant numbers. | These statements are often already found in research papers |
| Conflict of interest statement (S) | Statement declaring any conflict of interest for any author or funder associated with the work. | These statements are often already found in research papers |
| Ethics statement (S) | Statement outlining any ethical clearance required for the study and including details consistent with best practices. | These statements are often already found in research papers |
| Limitations statement (S) | Output includes a genuine statement of the study’s limitations and outlines where conclusions are less confident. | These statements are increasingly found in research papers |
| Verified authors (S) | Authors are verifiable as real people who would reasonably author the work. This should not be restricted to authors with academic affiliations, to avoid bias and inequality. | ORCID |
| Author contributions (S) | Clearly defined contributions for each author. | CRediT |
| Data stored in repositories (S) | All data included in an output should be stored in independent repositories and adhere to best practices and FAIR principles. All links should resolve correctly. | Zenodo, GEO, Dryad, Figshare |
| Methods/Protocols (S) | All protocols used for a study should be deposited into independent repositories and provide full detail for replication. | STAR methods, Protocols.io |
| Material deposition (S) | All materials used in a study should be purchasable from a manufacturer or deposited in an appropriate repository, available for reuse without conditions. | STAR materials/methods, Addgene |
| AI use & declaration (S) | Any use of AI should be transparently disclosed, in line with other methods. Appropriate use and disclosure shouldn’t harm trust; however, a lack of declaration or inappropriate use should reduce trust. | |
| Detailed change log (D) | Any updates should be accompanied by a detailed change log to convey what is new or has been altered. | Study registries |
| Linking to curation efforts (D) | Curation of an output can be a positive signal if the curation service has carried out additional checks or steps to confirm that the output conforms to best practices for that field. For example, an overlay journal may undertake additional steps prior to curating an article. | |
| Withdrawal or expression of concern notices (D) | Withdrawal of an output removes any sense of trustworthiness. Formal expressions of concern should also reduce trustworthiness. | Journal retractions |
| PubPeer or sleuthing comments (D) | Post-publication investigations of an output that lead to identification of issues should reduce trustworthiness. However, an output that undergoes detailed interrogation without raising issues should increase in trustworthiness. | PubPeer, sleuth blog posts/social media comments |
| Links to open peer review (D) | Associated transparent peer reviews should add to or detract from trustworthiness in a context-dependent manner. Peer review may be pre- or post-publication. Peer review is not infallible and should not be prioritised over other signals. | PREreview, Rapid Reviews, Review Commons |
| Citations (D) | How an output is used by the research community provides important insights into the reliability of the findings. However, citation data must be read in context to determine whether it adds to or detracts from trustworthiness. | Scite.ai |
| Altmetric attention (D) | Altmetric attention relates to the online discussions of an output. With context, this could add to or detract from trustworthiness. | Altmetric |
| Author engagement with comments or review (D) | A lack of constructive author engagement with review or expressions of concern should count as a warning against the trustworthiness of an output. Conversely, good-faith interactions should be rewarded and increase trust in the output. | |
| Computational reproducibility (D) | Any code used in an output should be available, reusable, and able to computationally reproduce the reported results. Successful reproduction would increase trust in the original output. | GitHub |
| Issues identified with static indicators (D) | If any issues are discovered with the static trust signals (for example, that they are fraudulent), this reduces trust in the original output. | |
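
To illustrate how the indicators in the table might be represented in software, here is a minimal Python sketch. It is not the trust indicator plugin itself. The names `Kind`, `Signal`, `Indicator` and `summarise`, the three signal values, and the example records are all hypothetical and chosen only for illustration. The sketch deliberately keeps indicators separate and does not collapse them into a single score, on the assumption that readers should see each signal in context.

```python
from dataclasses import dataclass
from enum import Enum


class Kind(Enum):
    STATIC = "S"    # matches the (S) label in the table
    DYNAMIC = "D"   # matches the (D) label in the table


class Signal(Enum):
    # Hypothetical three-way reading of an indicator; not defined in the source.
    POSITIVE = "positive"
    NEGATIVE = "negative"
    UNKNOWN = "unknown"


@dataclass(frozen=True)
class Indicator:
    name: str
    kind: Kind
    signal: Signal = Signal.UNKNOWN
    note: str = ""  # free-text context, e.g. why a signal is negative


def summarise(indicators):
    """Group indicator names by kind and signal without computing a score."""
    summary = {kind: {signal: [] for signal in Signal} for kind in Kind}
    for indicator in indicators:
        summary[indicator.kind][indicator.signal].append(indicator.name)
    return summary


# Illustrative records for one imaginary output (made-up values).
example = [
    Indicator("Funder statement", Kind.STATIC, Signal.POSITIVE),
    Indicator("Data stored in repositories", Kind.STATIC, Signal.NEGATIVE,
              note="a repository link does not resolve"),
    Indicator("Links to open peer review", Kind.DYNAMIC, Signal.POSITIVE),
    Indicator("Citations", Kind.DYNAMIC),  # no context yet, so left as UNKNOWN
]

report = summarise(example)
for kind in Kind:
    for signal in Signal:
        names = report[kind][signal]
        if names:
            print(f"{kind.name:<8}{signal.value:<9}{', '.join(names)}")
```

Grouping by kind and signal keeps the display close to the table: a reader can see which static statements are present and which dynamic signals have accumulated, with the `note` field carrying the context that several definitions call for.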