It’s Peer Review Week, and this year’s theme is “Rethinking Peer Review in the AI Era”.

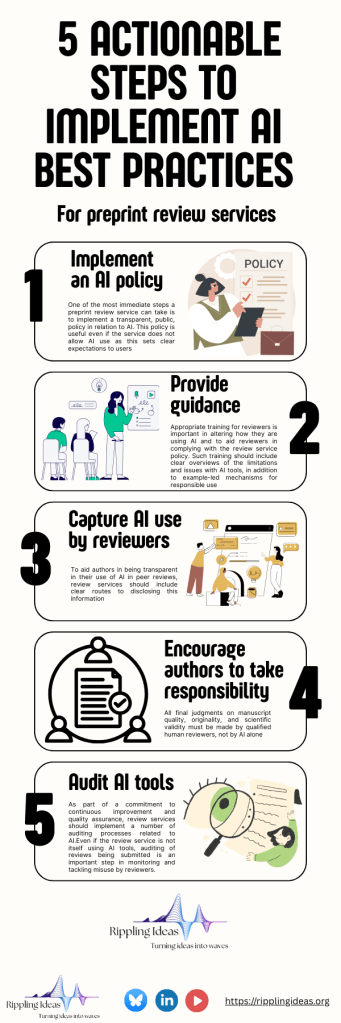

To contribute to this theme, we have developed a set of best practices for the use of AI and large language models (LLMs) by preprint review services. These best practices are intended to support the responsible and transparent use of such tools.

Who is this for?

The best practices are designed for preprint review services, preprint evaluation services and platforms hosting preprint reviews.

The guidelines do not discourage the use of AI; rather, they aim to ensure that it is used responsibly and transparently. A separate set of best practices is focussed on the authors of preprint reviews and evaluations.

Guiding Principles

- Transparency: AI involvement in reviews must be openly disclosed to all parties, including details of tool capabilities, limitations, and approved uses.

- Human Oversight: Qualified human reviewers must make all final decisions; AI tools should merely support, not replace, expert assessment.

- Security and Confidentiality: All AI tools must protect data privacy, and third-party tools must meet the platform’s own security and confidentiality standards.

- Quality and Integrity: AI may assist with tasks such as pre-screening and summarizing, but any findings must be verified by humans, and reviewers remain accountable for their reviews.

- Ethical and Unbiased Review: Review services must monitor and mitigate any bias introduced by AI and ensure equitable treatment of all authors.

Implementation Guidelines

- Establish public AI policies detailing acceptable tools and uses, as well as disclosure and auditing procedures.

- Include metadata fields and confirmation checkboxes in review forms to ensure reviewers declare and own their use of AI tools.

- Provide targeted training and guidance for reviewers and staff on responsible AI use and policy compliance.

- Audit AI tools and review outputs regularly for compliance, bias, and misuse.

Check out our actionable steps for implementing these best practices.